Natural language processing (NLP) is a subfield of artificial intelligence and computer science that deals with the interactions between computers and human languages. The goal of NLP is to enable computers to understand, interpret, and generate human language in a natural and useful way. This may include tasks like speech recognition, language translation, text summarization, sentiment analysis, and more. NLP is a rapidly growing field with a wide range of applications, especially in areas like customer service, language education, and information retrieval.

In this article, we’ll explore some examples of NLP using spaCy, a popular, open source library for NLP in Python. Let’s get started!

Jump ahead:

- How does natural language processing work?

- How does spaCy work?

    - Tokenizer
    - tagger
    - parser

- Named entity recognition

How does natural language processing work?

A prominent example of NLP is the omnipresent ChatGPT. ChatGPT is a large language model that has been trained on a vast dataset of text from the internet and can generate text similar to the text in the training dataset. It can also answer questions and perform other language-based tasks, like text summarization and language translation.

Conceptually, you can think of ChatGPT as the composition of two subsystems. One is in charge of NLP, which understands the user’s prompt, and the other handles natural language generation (NLG), which assembles the answers in a form that humans can understand.

How does spaCy work?

spaCy is designed specifically for production use, helping developers to perform tasks like tokenization, lemmatization, part-of-speech tagging, and named entity recognition. spaCy is known for its speed and efficiency, making it well-suited for large-scale NLP tasks.

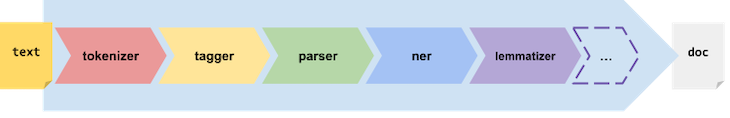

NLP can be conveniently represented as a pipeline of steps. Each step is a specific algorithm whose output becomes the input of the next one.

spaCy uses the following basic pipeline:

| Name | Description |
|---|---|
| Tokenizer | Segment text into tokens |
| tagger | Assign part-of-speech tags |
| parser | Assign dependency labels |
| ner | Detect and label named entities |
| lemmatizer | Assign base forms |
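To make the pipeline idea concrete, here is a minimal, library-free sketch of the chaining pattern: each step receives the result of the previous one and adds its own annotations. The function names (`tokenize`, `tag_numbers`, `count_tokens`, `run_pipeline`) and the dictionary-based document are hypothetical and only illustrate the pattern; this is not how spaCy is implemented.

```python
# Toy pipeline: NOT spaCy's implementation, only the chaining pattern.

def tokenize(text):
    """First step: turn raw text into a document with whitespace tokens."""
    return {"text": text, "tokens": text.split()}


def tag_numbers(doc):
    """Annotate each token with whether it consists only of digits."""
    doc["is_number"] = [token.isdigit() for token in doc["tokens"]]
    return doc


def count_tokens(doc):
    """Annotate the document with its token count."""
    doc["n_tokens"] = len(doc["tokens"])
    return doc


def run_pipeline(text, components):
    """Tokenize, then pass the document through each component in order."""
    doc = tokenize(text)
    for component in components:
        doc = component(doc)
    return doc


doc = run_pipeline("startup for 1 billion", [tag_numbers, count_tokens])
print(doc["tokens"])     # ['startup', 'for', '1', 'billion']
print(doc["is_number"])  # [False, False, True, False]
print(doc["n_tokens"])   # 4
```

As in the table above, the tokenizer runs first and every later component only adds information to the document it receives.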

In the GitHub repository, you can find an example for each of the steps. The file is named after the pipeline component. Now, we’ll describe the code for each component and the output. For the sake of clarity, we’ll use the same text to better understand the kind of information that each component of the pipeline will extract.

In each source file, the entry point to the library’s functionality is the nlp object. The nlp object is initialized with en_core_web_sm, a small English pipeline trained on written web text, such as blogs, news, and comments, which includes vocabulary, syntax, and entities.

The execution of the nlp default pipeline with the specified pre-trained model will populate different data structures within the doc object, depicted on the right in the figure above.
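As a small sketch of how pipeline components populate the doc object, the snippet below starts from an empty English pipeline, which needs no model download, and then adds spaCy's rule-based `sentencizer` component. Using `spacy.blank` and the `sentencizer` is an assumption made for illustration (and assumes spaCy v3); the article's examples use the trained en_core_web_sm pipeline instead.

```python
import spacy

# A blank pipeline contains only the tokenizer, no other components.
nlp = spacy.blank("en")
text = "Apple is looking at buying U.K. startup. Investors are worried."

doc = nlp(text)
print(nlp.pipe_names)                    # no components registered yet
print(doc.has_annotation("SENT_START"))  # sentence boundaries are not set

# Add a rule-based sentence splitter and run the pipeline again.
nlp.add_pipe("sentencizer")
doc_with_sents = nlp(text)
print(nlp.pipe_names)
print(doc_with_sents.has_annotation("SENT_START"))
print([sent.text for sent in doc_with_sents.sents])
```

The same text produces a richer doc object once a component is added, which is the mechanism the trained pipeline relies on for tags, dependencies, and entities.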

Choosing a pre-trained model may be crucial for your application. To facilitate the decision, you can use the boilerplate generator to choose between accuracy and efficiency. Higher accuracy comes from a larger and more complex model, which means a slower pipeline.

Tokenizer

spaCy’s Tokenizer allows you to segment text and create Doc objects with the discovered segment boundaries. Let’s run the following code:

```python
import spacy

# Load the small English pipeline
nlp = spacy.load("en_core_web_sm")
doc = nlp("Apple is looking at buying U.K. startup for $1 billion.")

# Print the list of tokens found in the text
print([token for token in doc])
```

The output of the execution is the list of tokens. In general, tokens can be words, characters, or subwords; here they are words, numbers, symbols, and punctuation marks:

```
> python .\01.tokenizer.py
[Apple, is, looking, at, buying, U.K., startup, for, $, 1, billion, .]
```

You might think that the result is a simple split of the input string on the space character. But, if you look closer, you’ll notice that the Tokenizer, which applies rules and exceptions specific to the English language, has correctly kept the “U.K.” abbreviation together while separating the closing period and splitting “$” from “1”.
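Beyond the text itself, each token carries a few lexical attributes. The sketch below prints some of them; it uses `spacy.blank("en")`, on the assumption that the blank English pipeline applies the same tokenization rules to this sentence as en_core_web_sm, so no model download is needed.

```python
import spacy

# The tokenizer is rule-based, so a blank English pipeline is enough here.
nlp = spacy.blank("en")
doc = nlp("Apple is looking at buying U.K. startup for $1 billion.")

tokens = [token.text for token in doc]
print(tokens)

# Each token keeps its character offset and a few lexical flags.
for token in doc:
    print(token.text, token.idx, token.is_punct, token.like_num)
```

Here `token.idx` is the character offset of the token in the original string, `is_punct` flags punctuation, and `like_num` flags tokens that look like numbers.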

tagger

The tagger component will take care of separating and categorizing, or tagging, the parts-of-speech in the input text:

```python
import spacy

nlp = spacy.load("en_core_web_sm")
doc = nlp("Apple is looking at buying U.K. startup for $1 billion.")

# Print each token together with its part-of-speech tag
print([(w.text, w.pos_) for w in doc])
```

For each token in the doc object, the pipeline populates the pos_ field, which contains the coarse-grained part-of-speech tag of that token:

```
> python .\02.tagger.py
[('Apple', 'PROPN'), ('is', 'AUX'), ('looking', 'VERB'), ('at', 'ADP'),
('buying', 'VERB'), ('U.K.', 'PROPN'), ('startup', 'NOUN'), ('for', 'ADP'),
('$', 'SYM'), ('1', 'NUM'), ('billion', 'NUM'), ('.', 'PUNCT')]
```

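The possible coarse-grained parts of speech are described in the following table. They follow the Universal POS tag set, so they are shared across languages; the fine-grained tags in the tag_ field, by contrast, depend on the language and on the model: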

| Abbreviation | Part of speech |
|---|---|
| ADJ | Adjective |
| ADP | Adposition |
| ADV | Adverb |
| AUX | Auxiliary |
| CCONJ | Coordinating conjunction |
| DET | Determiner |
| INTJ | Interjection |
| NOUN | Noun |
| NUM | Numeral |
| PART | Particle |
| PRON | Pronoun |
| PROPN | Proper noun |
| PUNCT | Punctuation |
| SCONJ | Subordinating conjunction |
| SYM | Symbol |
| VERB | Verb |
| X | Other |
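If you don't have the table at hand, the same descriptions can be looked up programmatically. A minimal sketch using `spacy.explain`, which reads spaCy's built-in glossary and does not need a trained model (the tags below are taken from the tagger output above):

```python
import spacy

# spacy.explain returns a short description from spaCy's glossary.
for tag in ["PROPN", "AUX", "ADP", "SYM"]:
    print(tag, "->", spacy.explain(tag))

description = spacy.explain("PROPN")
```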

parser

The parser component analyzes the syntactic dependencies between tokens and segments the input text into sentences. The output is collected in fields of the doc object. For each token, the .dep_ field holds the kind of dependency, and the .head field holds the syntactic parent of the token. Furthermore, the Boolean field .is_sent_start is true for tokens that start a sentence:

```python
import spacy
from spacy import displacy

nlp = spacy.load("en_core_web_sm")
doc = nlp("Apple, a big tech company, is looking at buying U.K. startup for $1 billion. Investors are worried about the final price.")

# Print each token with its syntactic head
for token in doc:
    print(token.text, token.head)

# Print the tokens that start a sentence
for token in doc:
    if token.is_sent_start:
        print(token.text, token.is_sent_start)

# Start a local web server that shows the dependency graph
displacy.serve(doc, style="dep")
```

The code is slightly longer because we used a longer input text to show how the segmentation works:

```
> python .\03.parser.py
Apple looking
, Apple
a company
big company
tech company
company Apple
, Apple
is looking
looking looking
at looking
buying at
...
final price
price about
. are
Apple True
Investors True
```

The first part of the output reports the .head field for each token, and the last two lines show the two tokens that start a sentence. The spaCy library also provides a means to visualize the dependency graph. The displacy module is the entry point to this functionality.

In particular, the last line of the code above starts a local web server. Point your browser at http://127.0.0.1:5000 to view the dependency graph.
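The parser example prints .head but not .dep_. The sketch below shows both fields, plus the children of each token, on a tiny document whose annotations are written by hand. This is purely illustrative: the heads and labels are assumptions standing in for what a trained parser would predict, and passing them to the `Doc` constructor assumes spaCy v3.

```python
import spacy
from spacy.tokens import Doc

nlp = spacy.blank("en")

# Hand-written annotations for illustration only; a trained parser
# would normally predict the heads and dependency labels.
words = ["Investors", "are", "worried", "."]
heads = [1, 1, 1, 1]  # index of each token's head; the root points to itself
deps = ["nsubj", "ROOT", "acomp", "punct"]
doc = Doc(nlp.vocab, words=words, heads=heads, deps=deps)

# For every token: its dependency label, its head, and its children.
for token in doc:
    children = [child.text for child in token.children]
    print(token.text, token.dep_, token.head.text, children)
```

The token labeled ROOT is its own head, and every other token can be reached from it by following the children, which is the tree that displacy draws.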

Named entity recognition

The named entity recognition (NER) component is a powerful step towards information extraction. It will locate and classify entities in text into categories, like the names of persons, organizations, locations, expressions of times, quantities, monetary values, percentages, and more:

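```python
import spacy
from spacy import displacy

nlp = spacy.load("en_core_web_sm")
doc = nlp("Apple is looking at buying U.K. startup for $1 billion.")

# Print each named entity with its label
for ent in doc.ents:
    print(ent.text, ent.label_)

# Start a local web server that shows the entities in the text
displacy.serve(doc, style="ent")
```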

The code above will produce:

```
> python .\04.ner.py
Apple ORG
U.K. GPE
$1 billion MONEY
```

In the result, it’s clear how effectively the categorization works. It correctly categorizes the U.K. token, regardless of the periods, and it also categorizes the three tokens of the string $1 billion as a single entity that indicates a quantity of money.
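To see how one entity can cover several tokens, the sketch below rebuilds the three entities from the output above by hand on a blank pipeline and prints their character offsets and tokens. The token indices and labels are assigned manually here as an illustration; in the article's example they are predicted by the ner component of en_core_web_sm.

```python
import spacy
from spacy.tokens import Span

nlp = spacy.blank("en")
doc = nlp("Apple is looking at buying U.K. startup for $1 billion.")

# Hand-assigned entity spans (token start, token end) mirroring the
# NER output shown above; a trained model would predict these.
doc.ents = [
    Span(doc, 0, 1, label="ORG"),     # Apple
    Span(doc, 5, 6, label="GPE"),     # U.K.
    Span(doc, 8, 11, label="MONEY"),  # $, 1, billion
]

for ent in doc.ents:
    print(ent.text, ent.label_, ent.start_char, ent.end_char,
          [token.text for token in ent])
```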

The categories vary from model to model. To print the categories that a model recognizes, you can use:

```python
import spacy

nlp = spacy.load("en_core_web_sm")

# Print the entity labels known to the ner component
print(nlp.get_pipe("ner").labels)
```

As shown for the parser, it’s possible to visualize the named entities recognized in the text. The displacy.serve call with style="ent" at the end of the NER example shows the named entities highlighted within the text.

Conclusion

In this article, we’ve just scratched the surface of the powerful architecture of spaCy.

spaCy is a framework for hosting pipelines of components that are highly specialized for NLP tasks. The behavior and performance of each component depend on the “quality” of the model, en_core_web_sm in our examples.

The quality of the model depends on the size of the dataset used to train it. Pre-trained models are good for most cases but, of course, for specific application domains you may want to consider training your own model.

The post A guide to natural language processing with Python using spaCy appeared first on LogRocket Blog.

